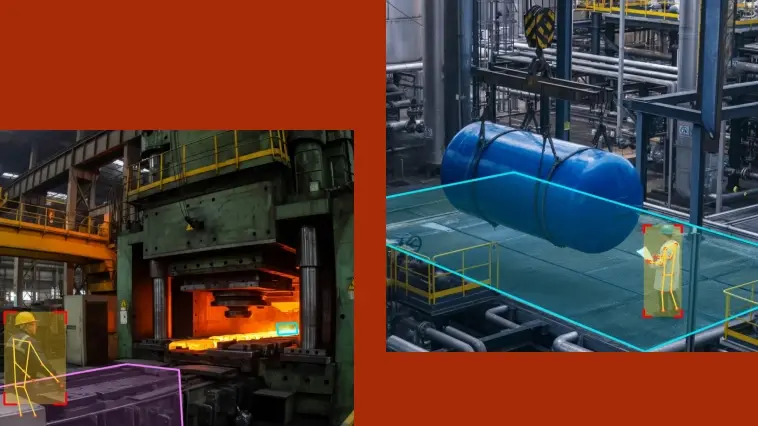

Event

Together for Safety: PPG x Intenseye Global Meetup

SIF Playbook

Mastering SIF Prevention Through Real-Time Safety Management

Podcast

Stay Safe with AI: Abby Ferri Part 2

Press

Building the AI Backbone of Workplace Safety
